ServerlessConf Paris: what about Function as a Service performance?

The leading global conference devoted to the serverless trend, Serverlessconf is a community conference that aims to share experiences about developing applications for so-called serverless architectures. Serverlessconf will take place in Paris on 14 and 15 February. Today we present one of the inspiring speakers who will join Serverlessconf, Avner Braverman, and talk with him about the performance of serverless functions.

Avner Braverman

Co-founder & CEO at Binaris, Avner has been working with distributed operating systems since his school days. He co-founded XIV, a distributed storage company, Parallel Machines, a high-performance analytics company, and recently Binaris, a high-performance serverless company. Avner’s “full stack” ranges from silicon architecture, through kernel design and up to JavaScript applications.

Could you introduce Binaris to us?

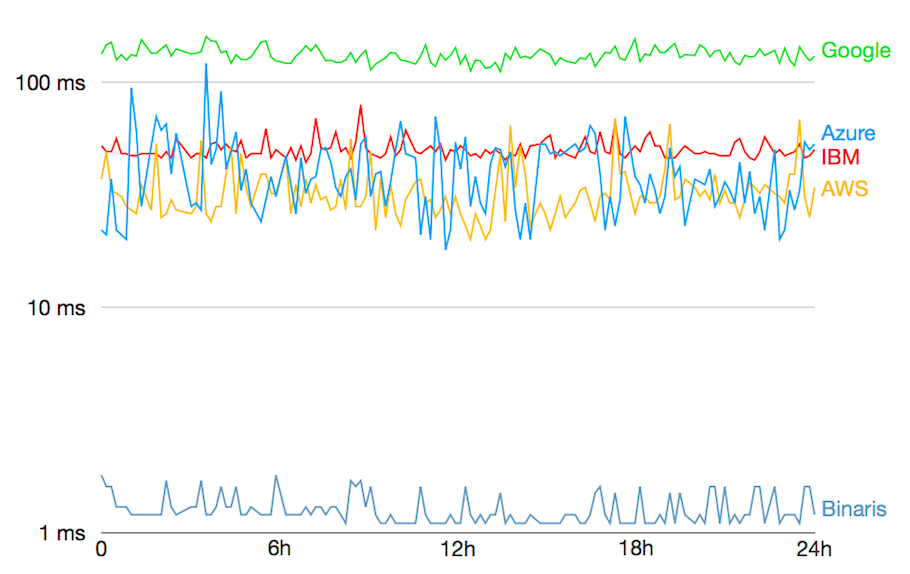

Our goal at Binaris is basically to take the value proposition of Function as a Service (fast development, never having to think about servers…) and bring it into core application development. Our point of view is that today Function as a Service is limited in the use-cases it addresses. It’s very good for data processing, ETL, and individual events, but if you need to build something more responsive, like interactive or real-time applications, you cannot use FaaS. The other limitation is that the logic has to be very simple: there’s an event, the function does something, and then it returns. If you want to build more complex logic, where functions invoke other functions, or more complex networks of functions, latency accumulates and can quickly climb to seconds. FaaS platforms today are just too slow for many applications. So what we are doing is building a super fast serverless platform. We have our own platform service running on the AWS cloud; basically, we are buying EC2 machines from AWS and selling a platform that makes your functions run much faster. Where Lambda takes 50 to 100 milliseconds to fire up a function (warm latency), our platform takes 1 to 3 milliseconds today, and is going to be even faster in the future. It really lets you build a network of microservices, a network of functions, where you don’t have to think about latency. We bring FaaS into use-cases where you need more responsiveness: advertising, gaming, interactive websites… or future use-cases like connected cars, drones or AI.
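To make the accumulation argument concrete, here is a minimal back-of-the-envelope sketch. It assumes a strictly sequential chain in which each function invokes the next, and it counts only the per-invocation latency quoted above (50 to 100 ms versus 1 to 3 ms), ignoring the time the functions themselves spend executing. The chain length of 20 and the helper name `chain_overhead_ms` are illustrative assumptions, not figures or code from Binaris.

```python
def chain_overhead_ms(depth: int, per_call_ms: float) -> float:
    """Total invocation overhead when `depth` functions are called one after another."""
    if depth < 0:
        raise ValueError("depth must be non-negative")
    return depth * per_call_ms


# Hypothetical chain length, chosen only for illustration.
DEPTH = 20

# Per-invocation latency ranges quoted in the interview (warm latency).
ranges = {
    "50-100 ms per call": (50, 100),
    "1-3 ms per call": (1, 3),
}

for label, (low, high) in ranges.items():
    best = chain_overhead_ms(DEPTH, low)
    worst = chain_overhead_ms(DEPTH, high)
    print(f"{label}: {best:.0f} to {worst:.0f} ms of overhead for {DEPTH} chained calls")
```

Under these assumptions, 20 chained calls cost 1 to 2 seconds of pure invocation overhead at 50 to 100 ms per call, against 20 to 60 ms at 1 to 3 ms per call.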

How did you come to develop this offering? Was it because of your previous experience with serverless?

Binaris started in early 2016, so it was really the beginning of serverless. We actually started looking at the problem from a computing efficiency point of view. Can we make the cloud significantly more efficient and do much more compute per gigawatt? With that amount of power in the cloud, how much work can you do? We were thinking of high-performance compute, high-performance functions as a service… From the system point of view, as you go up the stack (in terms of abstraction of the infrastructure), it becomes easier and faster to code and you become more agile, but you pay the price in dollars, and you pay the price in performance. As an analogy, if you can build an app with C or Python, it is much easier with Python, but response times are slower, and you need more servers to run the same workload. As you abstract more of your stack, it becomes less efficient. So we are challenging this assumption: since FaaS is such a high-level abstraction, good for developer productivity, how can we optimize the stack to focus on performance? We are starting with latency, because latency is what enables new use-cases for FaaS, but eventually we want to build the whole stack to be significantly more efficient, so it can be faster and cheaper.

Is the serverless paradigm here to stay?

I think that roughly half of cloud workload is going to be video processing of some kind (recognition, transcoding, etc.). This is not a good fit for functions. But for all the remaining applications (data analysis, real-time analytics, interactive applications, large control systems…), all application logic will shift to functions.

How do you see the evolution of the serverless ecosystem?

I see two layers. At the bottom layer is the platform, which needs to be vastly improved. The platform needs to be faster, and richer in the semantics offered to developers. For example: you have 3 functions A, B, C, and you want these 3 functions to operate as one unit, to fail or to succeed as one unit (in a transaction). For this, the platform needs to have a set of semantics that lets you express that. Above the platform level, there will be a whole new set of tools and libraries that help you build your applications faster. I think there will be less distinction between development, testing and operations. This will become a continuous spectrum, and I also believe that we’ll have a set of tools that lets us look at applications at different scales. Today we have one set of tools inside the VM and another one between microservices; with functions there will be no more distinction. We’ll have tools for debugging, architecting code, monitoring code… The function is a key concept that lets us optimize runtimes and enables a new set of tools to manage and deploy applications faster, and make developers more productive and more efficient, and applications more robust and responsive.
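As an illustration of the "fail or succeed as one unit" idea, here is a minimal sketch of how an application might approximate it today with compensating actions, in the absence of platform-level semantics. This is a swapped-in, application-level technique (in the spirit of the saga pattern), not something Avner describes and not the Binaris platform; the names `run_as_unit`, `Step` and the undo callbacks are hypothetical.

```python
from typing import Callable, List, Tuple

# Each step pairs an action with the compensation that undoes it (hypothetical shape).
Step = Tuple[Callable[[], None], Callable[[], None]]


def run_as_unit(steps: List[Step]) -> bool:
    """Run the actions in order; if one raises, undo the completed ones in reverse order.

    Returns True if every action succeeded, False if the unit was rolled back.
    """
    completed: List[Callable[[], None]] = []
    for action, undo in steps:
        try:
            action()
        except Exception:
            # Roll back everything that already succeeded, most recent first.
            for compensate in reversed(completed):
                compensate()
            return False
        completed.append(undo)
    return True


if __name__ == "__main__":
    log: List[str] = []

    def function_c() -> None:
        raise RuntimeError("C failed")

    ok = run_as_unit([
        (lambda: log.append("A"), lambda: log.append("undo A")),
        (lambda: log.append("B"), lambda: log.append("undo B")),
        (function_c, lambda: log.append("undo C")),
    ])
    print(ok, log)
```

The sketch shows why platform support matters: the caller has to write and maintain every compensation by hand, and nothing here protects against the compensation itself failing.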

What about AWS Step Functions to manage multiple functions?

It’s a first step. IBM just released a similar tool called Composer. The visual approach for composing functions is not enough. It’s OK if you have a few functions, but insufficient if you have a lot of microservices: 50, 100 (even thousands in the case of Netflix)… The ease of developing functions could make them proliferate: you could have more functions than microservices, and in that case we will need better tools. We need different visualizations, but also more code-oriented tools in order to do so. Think about user interfaces: we have great tools for building them, but if you want something richer and more complex, you have to code it; there is no way around it.

What will be the subject of your talk at Serverlessconf?

Our goal is to demonstrate why performance is such a game changer for FaaS. We’ll give a concrete example, and show how performance changes the developer experience. We’ll use a tool we built internally, which lets you run tests automatically in the cloud when you deploy a function. We’ll show a process with an application built out of a number of functions. Each function has a number of unit tests assigned to it, and when you deploy one function, we automatically run all unit and integration tests. Parallelizing those tests is essential if you want to be fast: we want to achieve all of this (deploying, testing) in about one second. This is a new step in developer experience, and we’ll show how this is enabled by the concept of FaaS and by high-performance FaaS.
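The idea of running every test in parallel on deploy can be sketched locally as follows. This is only an illustration using Python's standard thread pool; it is not the internal Binaris tool, which runs tests in the cloud, and the names `run_tests_in_parallel` and `_passes` are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


def _passes(test: Callable[[], None]) -> bool:
    """Return True if the test callable completes without raising."""
    try:
        test()
        return True
    except Exception:
        return False


def run_tests_in_parallel(
    tests: Dict[str, Callable[[], None]], max_workers: int = 8
) -> Dict[str, bool]:
    """Submit every test at once and collect a pass/fail result per test name."""
    if not tests:
        return {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {name: pool.submit(_passes, test) for name, test in tests.items()}
        return {name: future.result() for name, future in futures.items()}


if __name__ == "__main__":
    def test_addition() -> None:
        assert 1 + 1 == 2

    def test_broken() -> None:
        assert "a".upper() == "a"  # deliberately failing test

    print(run_tests_in_parallel({"addition": test_addition, "broken": test_broken}))
```

With tests running concurrently, the total wall-clock time is bounded by the slowest test rather than the sum of all tests, which is what makes a one-second deploy-and-test target plausible when each invocation is fast.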